Table of Contents

- Model Context Protocol

- fastmcp - The Pythonic MCP Package

- MCP via Claude Desktop

- MCP via Client Script & Ngrok

- Conclusion

2025-06-12

An Experience Report: Model Context Protocol Meets OpenAPI

Artificial Intelligence (AI) - especially Large Language Models (LLMs) - is on everyone's lips. LLMs are now expected not only to generate text, images, video, and audio, but also to operate the software we humans work with, in order to increase efficiency and make human-machine interfaces more natural. In this blog post, we offer a simple introduction to the Model Context Protocol (MCP) and share our first experiences with it. We also examine how APIs described with the OpenAPI standard can be integrated through MCP.

Model Context Protocol

The Model Context Protocol (MCP) is a protocol that defines how applications provide context to LLMs. In simple terms, it describes how an LLM can operate software (mostly via its interfaces).

It stands to reason that existing descriptions, such as an OpenAPI specification, can be used to define an MCP server for an application. However, before we bring MCP and OpenAPI together, let's first look at a few details of MCP.

Communication Model of the MCP

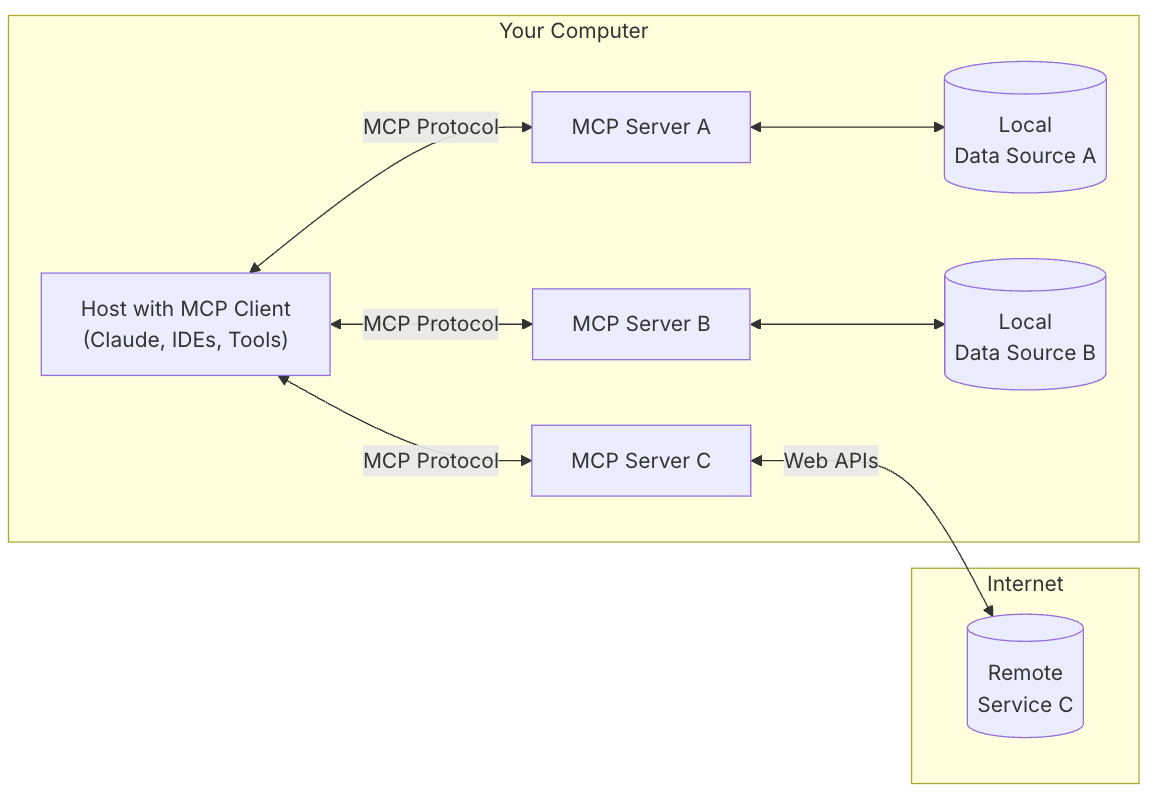

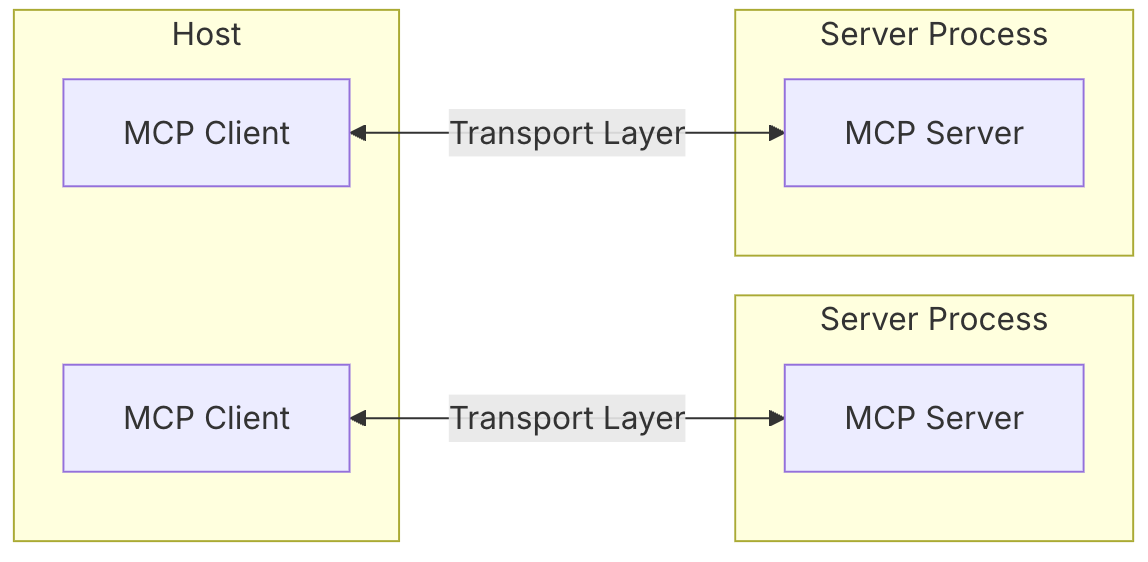

Communication in MCP follows a client-server model. In this case, the client is the LLM, and the server is an abstraction layer over the application to be operated. This layer tells the client how it can operate the underlying application.

The underlying application can be anything - a database/data source, a complex application, or an interface. The MCP host is the program that executes the MCP client (e.g., Claude Desktop, ChatGPT).

Important - the graphic shows bidirectional communication between client and server. This means our MCP server must be reachable by the client. A server running locally can be used directly by a local MCP host such as Claude Desktop, whereas internet-based MCP clients need a setup that exposes the server publicly, for example a tunnel such as Ngrok.

Resources and Tools in the MCP

I don't want to delve too deeply into the complexities of MCP - all details can be found in the official documentation. For this blog post, Resources and Tools matter most.

Resources are simply data sources. They describe how the client (the LLM) can retrieve data from the server. All forms of data are possible: text, binary data, images, sound, videos, structured or unstructured. Resource Templates parameterize this access so that the client can request one specific resource - for example, "Give me the purchase order with number X341".

Tools are actions that the LLM can trigger on running services: creating or manipulating a data record, running a calculation, triggering an order, or manipulating an image. Everything is conceivable.
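To make the difference between Resources and Tools more tangible, here is a minimal sketch of what the two kinds of requests could look like on the wire. MCP messages are JSON-RPC 2.0, and `resources/read` and `tools/call` are method names from the MCP specification. The URI scheme `orders://`, the tool name `create_issue`, and its arguments are made up for illustration.

```python
import json


def make_request(request_id, method, params):
    """Build a JSON-RPC 2.0 request, the message format MCP is based on."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


# Resource access: read one concrete resource by its URI.
# The URI scheme and the order number are hypothetical.
read_order = make_request(1, "resources/read", {"uri": "orders://X341"})

# Tool call: ask the server to perform an action.
# "create_issue" and its arguments are hypothetical.
create_issue = make_request(
    2,
    "tools/call",
    {"name": "create_issue", "arguments": {"summary": "Printer is on fire"}},
)

if __name__ == "__main__":
    print(json.dumps(read_order, indent=2))
    print(json.dumps(create_issue, indent=2))
```

A resource read only names what should be fetched, while a tool call names an action plus its arguments.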

Connection of an LLM and an Application via MCP

With the description of resources and tools, MCP promises that LLMs can operate applications in a meaningful way. This opens up efficiency gains and effectively creates a new interface for both machine-to-machine and human-machine communication. Looking into the details of resource and tool descriptions, it becomes apparent that much of the information used for MCP already exists in standard formats like OpenAPI. The description of structures is JSON-based in both cases. Naturally, the structure differs - at this point, fastmcp comes into play.

In the following section, we explore the Python package fastmcp and share our insights.
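The overlap between the two formats can be illustrated with a small sketch. It maps a single OpenAPI operation to a tool definition with the fields `name`, `description`, and `inputSchema`, which is the shape the MCP specification uses for tools. This is a deliberately simplified illustration and not how fastmcp implements the conversion; the function `operation_to_tool` and the example operation are hypothetical.

```python
def operation_to_tool(path, method, operation):
    """Turn one OpenAPI operation into an MCP-style tool definition (simplified)."""
    properties = {}
    required = []
    for param in operation.get("parameters", []):
        # Reuse the parameter's JSON schema; assume a string if none is given.
        properties[param["name"]] = param.get("schema", {"type": "string"})
        if param.get("required", False):
            required.append(param["name"])

    # Fall back to a generated name when the operation has no operationId.
    fallback_name = method.upper() + "_" + path.strip("/").replace("/", "_")
    return {
        "name": operation.get("operationId", fallback_name),
        "description": operation.get("summary", ""),
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


# Hypothetical OpenAPI fragment, not taken from any real API.
operation = {
    "operationId": "getOrder",
    "summary": "Fetch a purchase order by number",
    "parameters": [
        {"name": "orderId", "in": "path", "required": True, "schema": {"type": "string"}}
    ],
}

tool = operation_to_tool("/orders/{orderId}", "get", operation)
```

Request bodies, responses, and schema references are ignored here, which is exactly the kind of detail a real converter has to handle.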

fastmcp - The Pythonic MCP Package

fastmcp is a Python framework for implementing MCP. Version 1 of the package was incorporated into the official Python SDK. Version 2, current at the time of this blog post, focuses primarily on good usability and as complete a feature set as possible.

Upon reviewing the documentation, it's immediately apparent that there's a dedicated page for OpenAPI integration. The example appears remarkably simple:

    import httpx
    from fastmcp import FastMCP

    # Create an HTTP client for your API
    client = httpx.AsyncClient(base_url="https://blueshoe.youtrack.cloud/api/", headers={
        "Authorization": "############",
        "Accept": "application/json",
    })

    # Load your OpenAPI spec
    openapi_spec = httpx.get("https://blueshoe.youtrack.cloud/api/openapi.json").json()

    # Create the MCP server
    mcp = FastMCP.from_openapi(
        openapi_spec=openapi_spec,
        client=client,
        name="blueshoe-youtrack",
    )

    if __name__ == "__main__":
        mcp.run()

The server receives the OpenAPI specification, loaded from a URL and parsed from JSON, and an HTTP client object for calling the API.

My idea: I want to make our ticket system YouTrack, which we use for project management at Blueshoe, controllable via LLM.

This works seamlessly - but how can LLMs now communicate with my server? The documentation offers two options - Claude Desktop or Ngrok. What are the differences?

MCP Local or Remote?

fastmcp allows the server to be installed directly into a locally installed Claude Desktop. Communication then occurs via stdio. Alternatively, the server can be bound to a port, with communication then happening via SSE (Server-Sent Events). This is where Ngrok comes in: it offers a simple way to expose local programs to the internet. My initial attempts, however, started with Claude Desktop.
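To give a rough idea of what "communication via stdio" means: as far as I understand the MCP specification, the host starts the server as a child process and both sides exchange JSON-RPC messages as single lines over stdin and stdout. The following sketch imitates that framing with an in-memory buffer instead of a real process. The helper names `write_message` and `read_messages` are my own and not part of fastmcp.

```python
import io
import json


def write_message(stream, message):
    """Write one JSON-RPC message as a single line (json.dumps escapes newlines)."""
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for the server's stdin.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "resources/list"})

pipe.seek(0)
received = list(read_messages(pipe))
```

With SSE, the same JSON-RPC messages travel over HTTP instead, which is why that variant needs a port and, for remote clients, a publicly reachable address.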

MCP via Claude Desktop

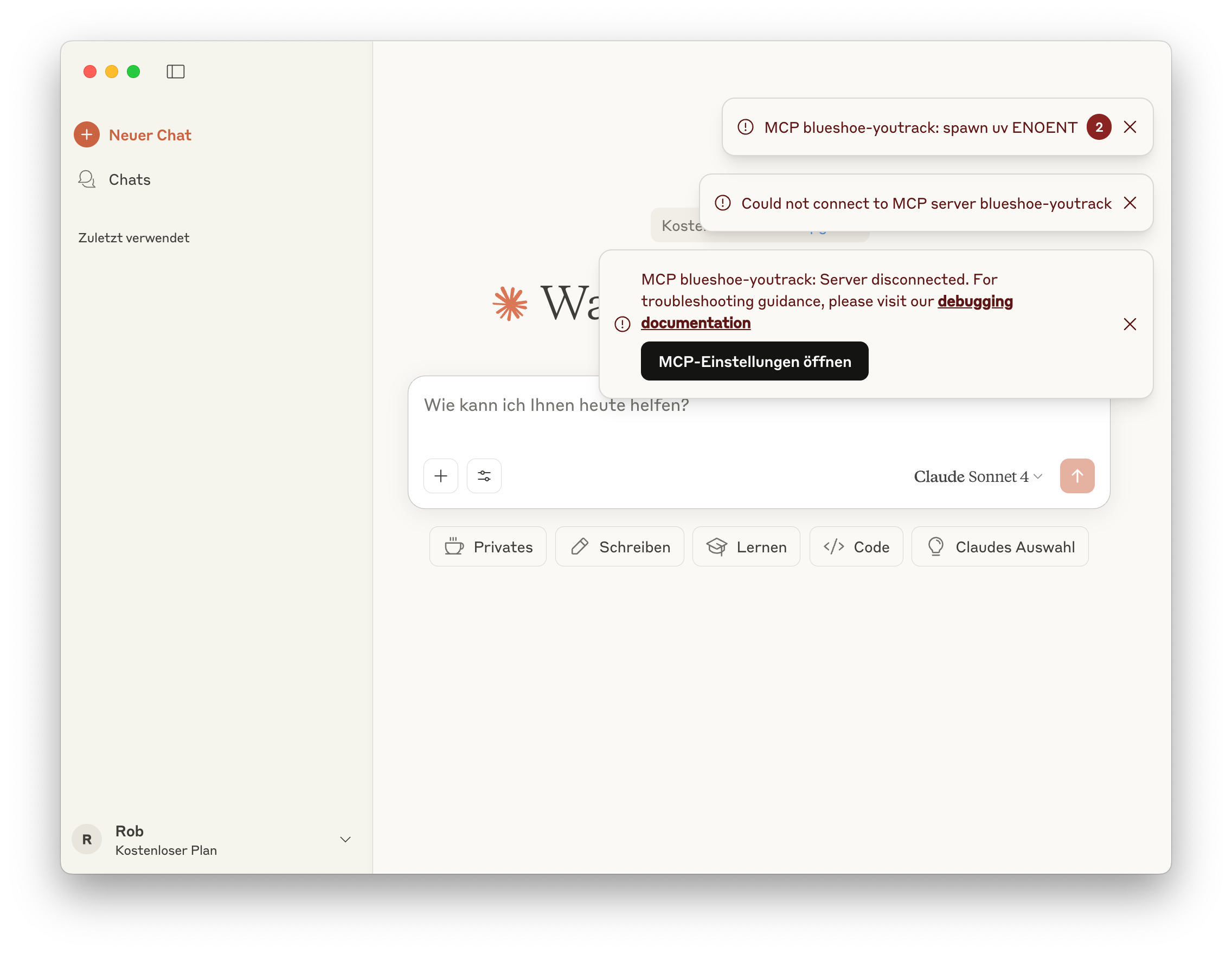

fastmcp comes with a command to install the MCP server in Claude Desktop. Accordingly, I mainly followed the documentation for connecting to Claude Desktop. So let's go:

    fastmcp install server.py

When starting Claude Desktop, the following message appeared:

Hmm, okay? What's the problem? I started the server manually, and that worked. After a brief investigation, I found that the installation command of fastmcp is not particularly smart:

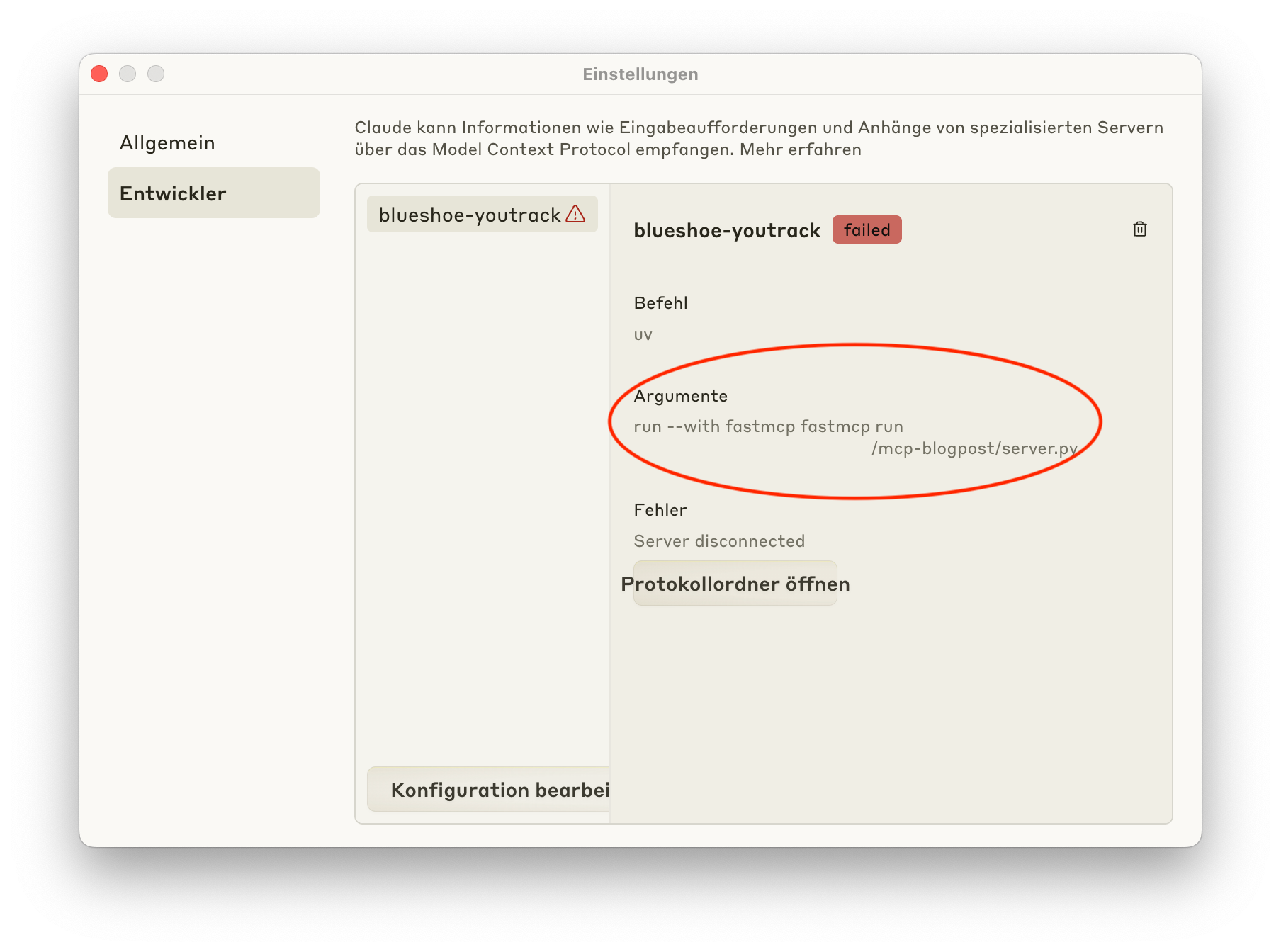

Unfortunately, the installation command does not select the correct Python interpreter. This prevents the script from being executed.

Note: I've cut out a small piece of the path here.

Okay, this can be easily resolved. In my project directory, I run the following command:

    which python3

I then put this interpreter in place of uv in the Claude Desktop configuration file. The path to server.py is the only argument.
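As a sketch of what such an entry looks like, the following snippet builds the relevant part of the configuration. It assumes the usual `mcpServers` layout of the Claude Desktop configuration file with a `command` and an `args` list. The script path is a placeholder, and `sys.executable` stands in for the output of `which python3`.

```python
import json
import sys


def claude_desktop_entry(server_name, server_script, interpreter=sys.executable):
    """Build an mcpServers entry that runs the server with an explicit interpreter."""
    return {
        "mcpServers": {
            server_name: {
                # Absolute path to the Python interpreter of the project
                "command": interpreter,
                # The only argument is the path to the server script
                "args": [server_script],
            }
        }
    }


# "/path/to/project/server.py" is a placeholder, not the real path.
config = claude_desktop_entry("blueshoe-youtrack", "/path/to/project/server.py")

if __name__ == "__main__":
    print(json.dumps(config, indent=2))
```

The printed JSON can be compared with, or merged into, the existing configuration file by hand.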

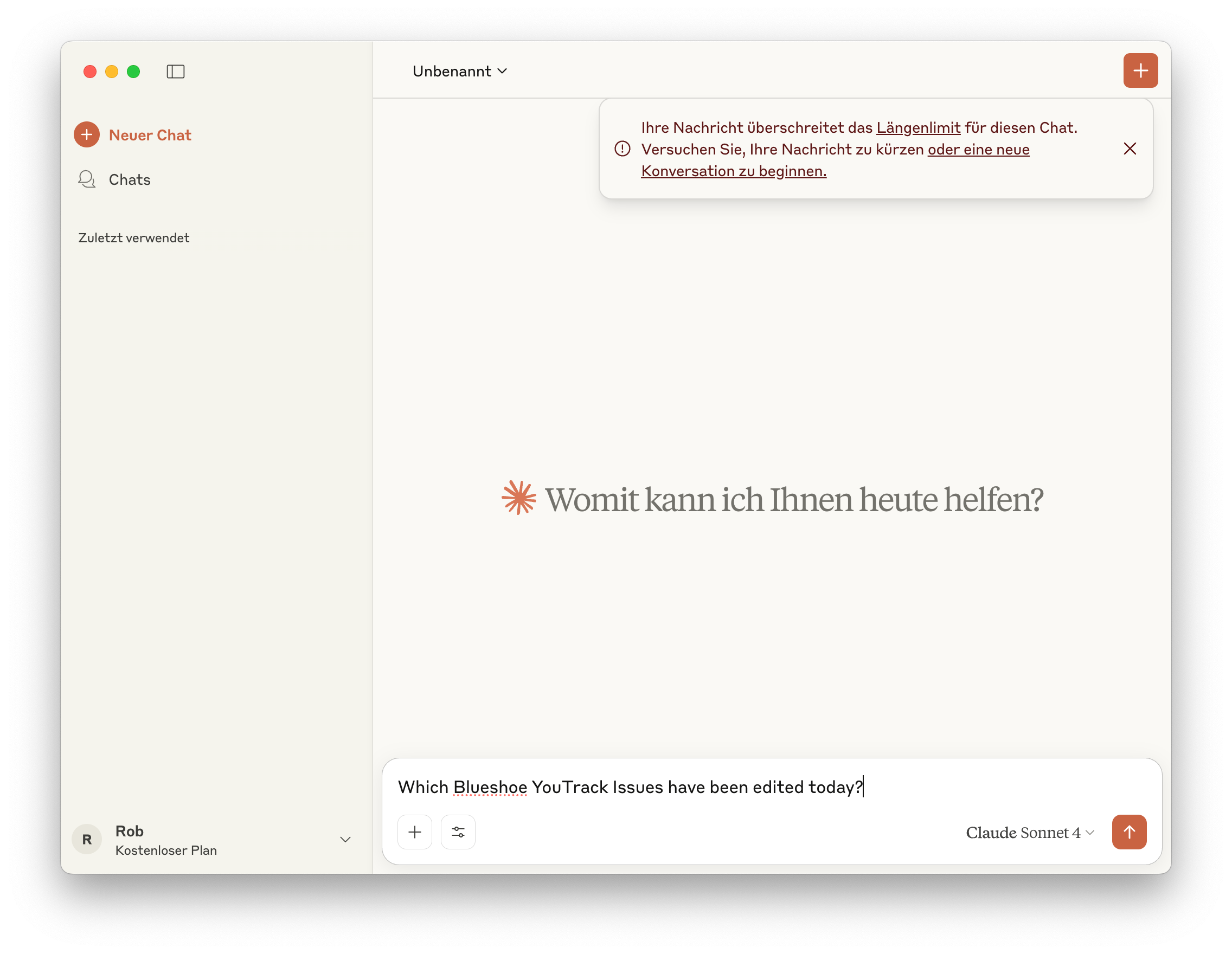

Problem solved! Claude Desktop starts without problems. I ask a question - which tickets were processed today?

Ah yes. Crap! It turns out that such an extensive OpenAPI specification immediately exceeds the length limits. An extensive specification usually suggests a thorough description, and I have a good opinion of JetBrains and their products, so I was hoping that the YouTrack OpenAPI specification would be ideal input for my experiment.

I tested further models, including paid variants. Unfortunately, I often ran into token or rate limits. My personal impression at this point was one of powerlessness: everything is easily put together, but how can I get to the bottom of the problems? I ended up debugging my server script with print statements, which was not a particularly pleasant experience.
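One possible way to approach the length limits is to shrink the OpenAPI specification before handing it to the MCP server, so that fewer operations are turned into tools. The following is a minimal sketch of that idea using plain dictionaries. I have not verified that it is enough for the YouTrack specification; the function `filter_spec` and the tiny example spec are hypothetical.

```python
import json

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def filter_spec(spec, allowed_prefixes, allowed_methods=("get",)):
    """Return a copy of an OpenAPI spec that keeps only the selected operations.

    allowed_prefixes is a list of path prefixes, e.g. ["/issues"].
    """
    filtered = {}
    for path, item in spec.get("paths", {}).items():
        if not path.startswith(tuple(allowed_prefixes)):
            continue
        operations = {k: v for k, v in item.items() if k in allowed_methods}
        if not operations:
            continue
        # Keep path-level keys such as shared parameters.
        shared = {k: v for k, v in item.items() if k not in HTTP_METHODS}
        filtered[path] = {**shared, **operations}
    return {**spec, "paths": filtered}


# Tiny made-up spec, only to show the effect.
spec = {
    "openapi": "3.0.0",
    "paths": {
        "/issues": {
            "get": {"operationId": "listIssues"},
            "post": {"operationId": "createIssue"},
        },
        "/admin/users": {"get": {"operationId": "listUsers"}},
    },
}

small = filter_spec(spec, ["/issues"])

if __name__ == "__main__":
    print(len(json.dumps(spec)), "->", len(json.dumps(small)), "characters")
```

The sketch leaves the schema definitions under `components` untouched, so a large part of a real specification may remain and would need pruning as well.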

MCP via Client Script & Ngrok

Okay, enough of UIs - then we'll script the client.

    import anthropic
    from rich import print

    # Your server URL (replace with your actual URL)
    url = 'https://############.ngrok-free.app'

    client = anthropic.Anthropic()

    response = client.beta.messages.create(
        model="claude-3-5-haiku-20241022",
        max_tokens=1000,
        messages=[{"role": "user", "content": "What issues have been edited on Blueshoe YouTrack today?"}],
        mcp_servers=[
            {
                "type": "url",
                "url": f"{url}/sse",
                "name": "youtrack",
            }
        ],
        extra_headers={
            "anthropic-beta": "mcp-client-2025-04-04"
        }
    )

    print(response.content)

With ngrok we make our server available on the internet:

    ngrok http 8000

The client script gets the corresponding address and our question: what was processed today in our YouTrack?

Some interesting outputs in the log:

    BetaMCPToolUseBlock(
        id='mcptool_01JqQroumE3gHkVnYFZzRc7C',
        input={'query': 'updated: today'},
        name='POST_searchassist',
        server_name='youtrack',
        type='mcp_tool_use'
    )

Aha - a query updated: today - that looks good, right?

Unfortunately no - the final answer looks roughly like this:

I apologize for the persistent errors. It seems there might be an issue with the YouTrack API tools at the moment. Without being able to directly query the system, I can provide some general advice: To find issues edited today in YouTrack, you would typically use a search query like "updated: today" in the YouTrack interface.

Just use the YouTrack UI! Unfortunately, the LLM didn't reach a useful result when using the YouTrack API. Once I had resolved some initial authentication problems, the LLM actually had a clear path. Still, it was not able to formulate a sensible API query. Even seemingly simple questions such as "How many tickets were processed today?" (we "only" want a number) did not work.
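For comparison, this is roughly the request I would have expected for the question. The sketch only builds the URL and sends nothing. It assumes that the YouTrack REST API offers an issues endpoint below the API base URL that accepts a `query` and a `fields` parameter; the host name and the selected fields are placeholders.

```python
from urllib.parse import urlencode


def issues_updated_today_url(base_url):
    """Build the URL for a search for issues that were updated today."""
    params = {
        # Same search query the LLM came up with in the log above
        "query": "updated: today",
        # Assumption: only ask for the fields we actually need
        "fields": "idReadable,summary",
    }
    return base_url.rstrip("/") + "/issues?" + urlencode(params)


# Placeholder host, not a real instance.
url = issues_updated_today_url("https://example.youtrack.cloud/api/")

if __name__ == "__main__":
    print(url)
```

A narrow, hand-written tool around such a request leaves the LLM far fewer decisions than a tool generated for every operation in the specification.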

Conclusion

The Model Context Protocol is a good systematization for communication between LLMs and existing systems. However, it does not mean that database or application integration is completed with a simple handshake. Even excellent integration points such as OpenAPI schemas cannot be plugged in without further ado - the integration has to be tuned carefully before an LLM can deliver good results.

Best Practices for creating MCP structures are already available. An OpenAPI schema can form the basis for integration - but this does not replace the implementation.

MCP is not yet plug'n'play. It also requires good engineering and clear goals for what an integration should achieve. I will continue to follow this topic, probably also in a further blog post.

One question that I, as a software project author, ask myself - how does one actually reliably test these integrations? The documentation from fastmcp appears somewhat brief in the Testing area. If you know something about this or have insights, please share in the comments.