Table of Contents

- Why Django-Celery?

- How to set up Celery

- This is what Celery tasks look like in Django

- Periodic tasks with django-celery-beat

- Celery in production

- Frequent stumbling blocks

- Best practices for clean tasks

- Conclusion

2025-07-15

Everything you need to know about Django-Celery

Mastering Django Celery: Managing asynchronous tasks like a pro

Integrating Celery into your Django application enables powerful, asynchronous task processing. Learn how to effectively set up Django-Celery and optimise it for production environments to make your application stable and performant.

Have you ever experienced this? An order comes in, and suddenly your Django app hangs because emails are being sent or PDFs are being generated. This is where Django Celery comes in: it moves this kind of work out of the request and into background processing.

Why Django-Celery?

Many tasks in web applications do not need to be processed synchronously. These include, for example, sending emails, generating PDFs or processing large amounts of data. This is exactly where Celery comes into play: it allows you to perform these tasks in the background (asynchronously).

Typical use cases for Celery in Django:

- Sending emails

- Image processing

- External API calls

- Database cleanup or analysis

- Recurring tasks (e.g. report generation with celery beat)

How to set up Celery

1. Install the required packages

pip install celery redis

If you need periodic tasks:

pip install django-celery-beat

2. Prepare project structure

Create a file celery.py in your project folder:

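# myproject/celery.py
import os

from celery import Celery

# Make sure the Django settings can be loaded before the app is configured.
os.environ.setdefault("DJANGO_SETTINGS_MODULE", "myproject.settings")

app = Celery("myproject")

# Read every setting prefixed with CELERY_ from Django's settings.py.
app.config_from_object("django.conf:settings", namespace="CELERY")

# Look for a tasks.py module in each installed app.
app.autodiscover_tasks()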

In myproject/__init__.py, import the app so that it is loaded whenever Django starts:

from .celery import app as celery_app

__all__ = ["celery_app"]

3. Example configuration in settings.py

CELERY_BROKER_URL = "redis://localhost:6379/0"

CELERY_RESULT_BACKEND = "redis://localhost:6379/0"

CELERY_ACCEPT_CONTENT = ["json"]

CELERY_TASK_SERIALIZER = "json"
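
The configuration above can be extended with a few optional safeguards. The following is only a sketch: it assumes the CELERY_ namespace from celery.py, and all values are illustrative assumptions rather than recommendations.

```python
# settings.py (excerpt) - optional additions, all values are assumptions

# Store results as JSON too, matching the task serializer.
CELERY_RESULT_SERIALIZER = "json"

# Hard limit in seconds: the worker process running the task is killed
# and replaced once a task exceeds this runtime.
CELERY_TASK_TIME_LIMIT = 300

# Soft limit in seconds: SoftTimeLimitExceeded is raised inside the task
# first, which gives it a chance to clean up before the hard limit hits.
CELERY_TASK_SOFT_TIME_LIMIT = 240

# Delete stored task results after one hour.
CELERY_RESULT_EXPIRES = 3600
```

Keeping the soft limit below the hard limit is the point of having both: the task gets a window to react before the worker is killed.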

Test your setup with a simple task.

This is what Celery tasks look like in Django

1. Simple task

from celery import shared_task

@shared_task
def send_email_to_user(user_id):
    print(f"Email to user {user_id} sent")

You then call it with:

send_email_to_user.delay(user.id)

.delay() vs. .apply_async()

- .delay() is a shortcut for .apply_async() with default options.

- With .apply_async() you can use countdown, eta or retry, for example.

2. More complex tasks with transactions and logging

from celery import shared_task

@shared_task
def run_customer_basket_groups_processing(basket_id):
    # Project imports happen inside the task body.
    from shop.order.processing import BasketProcessor
    from shop.models import Basket
    from shop.exceptions import ReachedAdvertisingMediumQuotaWarning
    from django.db import transaction
    import logging

    logger = logging.getLogger(__name__)
    basket = Basket.objects.get(pk=basket_id)

    try:
        if basket.user.groups.exists():
            # One transaction for all customer baskets:
            # if any step fails, every change is rolled back.
            with transaction.atomic():
                for cbg in basket.customerbasketgroups.all():
                    user = cbg.consignee.user
                    customer_basket = Basket.objects.create(
                        user=user,
                        field_staff=basket.user,
                        discount=basket.discount,
                        discount_code=basket.discount_code,
                        shipment_options=basket.shipment_options,
                    )
                    customer_basket.add_basket_lines_to_basket(
                        cbg.basket_lines, as_stock_order=True, check_quotas=False
                    )
                    basket_processor = BasketProcessor(basket=customer_basket)
                    basket_processor.process_basket()
    except Exception as e:
        # Log the failure instead of letting the task crash.
        logger.error(f"Error when processing basket {basket_id}: {e}")

transaction.atomic() ensures that no half-finished order is created in the event of an error.
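
If you want to see this all-or-nothing behaviour without a Django project, the sketch below reproduces it with Python's built-in sqlite3 module instead of the Django ORM. It is a stand-in, not the code from the shop: the `basket` table and the `create_baskets` helper are hypothetical, and the sqlite3 connection used as a context manager plays the role of transaction.atomic().

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE basket (id INTEGER PRIMARY KEY, owner TEXT NOT NULL)")
conn.commit()


def create_baskets(owners):
    """Insert one basket per owner; either all rows are stored or none."""
    try:
        # "with conn" commits if the block succeeds and rolls back if it raises.
        with conn:
            for owner in owners:
                conn.execute("INSERT INTO basket (owner) VALUES (?)", (owner,))
        return True
    except sqlite3.IntegrityError:
        return False


def basket_count():
    return conn.execute("SELECT COUNT(*) FROM basket").fetchone()[0]


# The third owner violates NOT NULL, so the first two inserts are undone too.
failed_run = create_baskets(["alice", "bob", None])
count_after_failure = basket_count()

# A clean run stores both rows.
clean_run = create_baskets(["alice", "bob"])
count_after_success = basket_count()
```

The failed run leaves the table empty even though two inserts had already gone through, which is exactly the guarantee you want for an order.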

Periodic tasks with django-celery-beat

Activate app:

INSTALLED_APPS += ["django_celery_beat"]

Then run the migrations so that the tables for the schedules are created:

python manage.py migrate

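Create an example task. Because send_email_to_user expects a user_id, the positional arguments are passed as JSON in args (the ID 1 is only a placeholder):

import json

from django_celery_beat.models import PeriodicTask, IntervalSchedule

schedule, _ = IntervalSchedule.objects.get_or_create(every=10, period=IntervalSchedule.SECONDS)

PeriodicTask.objects.create(
    interval=schedule,
    name="Example Task",
    task="myapp.tasks.send_email_to_user",
    args=json.dumps([1]),
)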

Done! Your first periodic task has been created. Once beat is running, Celery triggers the task every 10 seconds, for example to send a notification or reminder email at regular intervals.

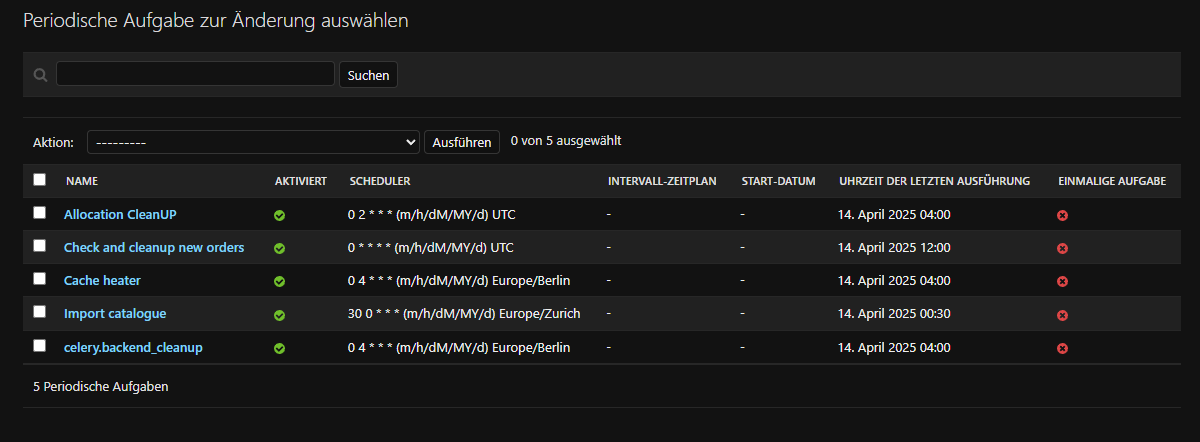

The admin interface then shows you all the tasks set up with status and schedule - ideal for monitoring and management in live operation.

Celery in production

1. Start the worker

celery -A myproject worker -l info

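2. Start beat

celery -A myproject beat -l info --scheduler django_celery_beat.schedulers:DatabaseScheduler

The --scheduler option tells beat to read the periodic tasks that django-celery-beat stores in the database.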

3. Use deployment tools

- Use Supervisor or systemd for process management.

- Log errors e.g. with Sentry or external tools.

- Implement retries for temporary errors.

Frequent stumbling blocks

- Redis not started → ConnectionRefusedError

- .autodiscover_tasks() forgotten → Tasks are not found

- Task hangs → Worker not started or deadlock in database
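
The first stumbling block is easy to rule out before you start debugging Celery itself. The helper below is a minimal sketch that uses only the standard library; `broker_reachable` is a hypothetical name, and host and port are assumed to match the CELERY_BROKER_URL from the settings example.

```python
import socket


def broker_reachable(host="localhost", port=6379, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers ConnectionRefusedError as well as timeouts.
        return False
```

Calling broker_reachable() in a Django shell tells you right away whether something is listening on the Redis port. It only checks the TCP connection, not whether the service behind it is actually Redis.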

Best practices for clean tasks

- Keep tasks small, fast and repeatable

- Use logging (e.g. logger.info(), logger.error())

- Plan timeouts and retry logic

- Use transaction.atomic() for database actions

- Use .apply_async(countdown=...) for scheduled execution
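
What "repeatable" means in practice: running the same task twice must not do the work twice. Here is a minimal sketch of that idea in plain Python. The `send_reminder` function and the `sent_reminders` set are hypothetical; in a real project the function would be a @shared_task and the set would be a field or table in your database.

```python
import logging

logger = logging.getLogger(__name__)

# Stand-in for a database record of reminders that were already sent.
sent_reminders = set()


def send_reminder(order_id):
    """Safe to run twice: a repeated call for the same order does nothing."""
    if order_id in sent_reminders:
        logger.info("Reminder for order %s already sent, skipping", order_id)
        return False

    # The actual sending would happen here.
    sent_reminders.add(order_id)
    logger.info("Reminder for order %s sent", order_id)
    return True
```

With a check like this, a retry or an accidental double call is harmless, which is what makes retry logic safe to switch on.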

Conclusion

Celery is a powerful tool that can make your Django app more flexible, faster and more robust. Whether simple email tasks or complex order processing - with the right setup and a few best practices, you'll be on the safe side.

Get started now - and bring Django Celery to your app!