Table of Contents

- Introduction

- Commonalities

- Differences

- Performance Comparison

- Project Structure and Developer Workflow

- Deployment in Practice: From Local to Live

- Error Handling and Debugging

- When which framework?

- Conclusion

- FAQ – Frequently Asked Questions about Choosing the Right Framework


2025-06-03

FastAPI vs. Robyn: A Detailed Comparison

In the world of modern API development, developers often face the question: Which framework is right for my project? FastAPI and Robyn are two of the (more or less) rising stars in Python API development. Both offer modern features and high performance, but they differ in several important aspects. In this article, we take a detailed look at the commonalities and differences between these two frameworks.

Introduction

Choosing the right API framework is crucial for the success of a project. While FastAPI has been established for several years, Robyn is gaining popularity as a newer player. Both frameworks promise high performance and modern development approaches, but they differ in their implementation and strengths. In this article, we will compare the most important aspects of both frameworks and help you make the right choice for your project.

Commonalities

FastAPI and Robyn share some fundamental characteristics that make them modern and efficient API frameworks:

- Both are modern, asynchronous web frameworks

- They offer high performance through asynchronous processing

- Both support OpenAPI/Swagger documentation

- They use modern Python features like Type Hints

- Both are lightweight and modular in design

Differences

Runtime

FastAPI is built on Starlette and typically runs on Uvicorn as its ASGI server, while Robyn has implemented its own runtime in Rust. This leads to interesting differences: FastAPI benefits from mature integration with the Python ecosystem and broad community support, while Robyn's Rust implementation potentially offers better performance for certain workloads. However, the Rust layer also means that Robyn may be less flexible when integrating Python libraries and might require more maintenance.

Endpoint Handling

While both frameworks pursue similar goals, they differ in their endpoint implementation:

FastAPI uses a decorator-based approach with a strong focus on typing and validation, as well as request destructuring and injection:

```python
from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()


class Item(BaseModel):
    id: str
    name: str
    price: float


@app.post("/items/")
async def create_item(item: Item):
    # create item in db
    # ...
    return item


@app.put("/items/{item_id}")
async def update_item(item_id: str, item: Item):
    """
    A client would call me like: PUT {base_url}/items/0485fd43-1345-4336-877c-4b4775810

    And `item_id` is automatically made available to this endpoint!
    """
    # update item in db
    # ...
    return item


@app.put("/items/{item_id}/move")
async def move_item(item_id: str, item: Item, directory: str | None = None):
    """
    A client would call me like: PUT {base_url}/items/0485fd43-1345-4336-877c-4b4775810/move?directory=new-n-shiny

    And `directory` is automatically made available to this endpoint!
    """
    # move item
    # ...
    return item
```
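To make the "injection" part more concrete, here is a minimal stdlib sketch of signature-based parameter injection: the route template is matched against the URL, and values are passed to the handler by parameter name. This is not FastAPI's actual implementation. `compile_route` and `call_with_injection` are hypothetical helpers, the handler is synchronous, and unlike FastAPI the sketch performs no type conversion and no request-body parsing.

```python
import inspect
import re
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def compile_route(template: str) -> re.Pattern:
    """Turn "/items/{item_id}/move" into a regex with named groups."""
    pattern = re.sub(r"\{(\w+)\}", r"(?P<\1>[^/]+)", template)
    return re.compile(f"^{pattern}$")


def call_with_injection(handler, template: str, url: str):
    """Match the URL, then pass path/query values to the handler by parameter name."""
    parts = urlsplit(url)
    match = compile_route(template).match(parts.path)
    if match is None:
        raise LookupError(f"{parts.path!r} does not match {template!r}")
    path_values = match.groupdict()
    # parse_qs returns a list per key; this sketch keeps only the first value
    query_values = {key: values[0] for key, values in parse_qs(parts.query).items()}

    kwargs = {}
    for name, param in inspect.signature(handler).parameters.items():
        if name in path_values:
            kwargs[name] = path_values[name]  # path parameters come from the template
        elif name in query_values:
            kwargs[name] = query_values[name]  # anything else may come from the query string
        elif param.default is not inspect.Parameter.empty:
            kwargs[name] = param.default  # optional parameter not supplied by the client
        else:
            raise TypeError(f"missing required parameter {name!r}")
    return handler(**kwargs)


def move_item(item_id: str, directory: Optional[str] = None):
    return {"item_id": item_id, "directory": directory}


print(call_with_injection(move_item, "/items/{item_id}/move", "/items/abc/move?directory=new-n-shiny"))
```

The handler never touches a request object; it only declares what it needs, and the parameter names do the routing work.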

Robyn, on the other hand, offers a more flexible but, in my opinion, more cumbersome approach to path and query parameter injection:

```python
# Why import types from three different modules?
from robyn import Robyn, Request
from robyn.types import PathParams
from robyn.robyn import QueryParams

app = Robyn(__file__)


@app.post("/items/")
async def create_item(request: Request):
    data = request.json()  # Robyn's Request.json() is synchronous, so no await
    return data


@app.put("/items/:item_id")
async def update_item(
    request: Request,
    path_parameters: PathParams,  # NOTE: variable name has to be `path_parameters` for injection to work
):
    item_id: str = str(path_parameters["item_id"])
    item = request.json()
    # update item in db
    # ...
    return item


@app.put("/items/:item_id/move")
async def move_item(
    request: Request,
    path_parameters: PathParams,
    query_parameters: QueryParams,  # NOTE: variable name has to be `query_parameters` for injection to work
):
    item_id: str = str(path_parameters["item_id"])
    directory: str | None = query_parameters.get("directory", None)
    item = request.json()
    # move item
    # ...
    return item
```

Path and query parameters are also available on the `Request` object (`request.path_params` and `request.query_params`), so in theory you can skip the injection altogether.
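The request-object style can be sketched with the standard library as well. `SketchRequest` and `build_request` are hypothetical stand-ins, not Robyn's `Request` class or router; the sketch assumes, as in typical routers, that a `:name` segment matches exactly one path segment. That is also why the `move` endpoint needs its own `/items/:item_id/move` route: `/items/:item_id` alone does not match `/items/abc/move`.

```python
import re
from dataclasses import dataclass, field
from typing import Optional
from urllib.parse import parse_qs, urlsplit


@dataclass
class SketchRequest:
    """Hypothetical stand-in for a framework request object."""
    path_params: dict[str, str] = field(default_factory=dict)
    query_params: dict[str, list[str]] = field(default_factory=dict)


def build_request(template: str, url: str) -> Optional[SketchRequest]:
    """Match a ':name'-style template and collect path and query parameters."""
    parts = urlsplit(url)
    pattern = re.sub(r":(\w+)", r"(?P<\1>[^/]+)", template)  # one segment per parameter
    match = re.fullmatch(pattern, parts.path)
    if match is None:
        return None
    return SketchRequest(match.groupdict(), parse_qs(parts.query))


def move_item(request: SketchRequest) -> dict:
    # Everything is read from the request object; no extra injected arguments needed
    item_id = request.path_params["item_id"]
    directory = request.query_params.get("directory", [None])[0]
    return {"item_id": item_id, "directory": directory}


req = build_request("/items/:item_id/move", "/items/abc/move?directory=new-n-shiny")
print(move_item(req))
```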

ORM Support

Database operation support is an important aspect of framework selection:

FastAPI:

- Allows easy integration with ORMs like SQLAlchemy, thanks to Pydantic's ability to read data directly from ORM objects (`from_attributes`)

- Supports asynchronous ORMs like Tortoise ORM

- Has an active community with numerous examples and best practices for ORM usage

- Automatically generates OpenAPI documentation from the Pydantic models used for requests and responses (including SQLModel models)

- Offers SQLModel, a companion ORM library by FastAPI's creator, built on SQLAlchemy and Pydantic

Robyn:

- More flexible in the choice of database solutions (including Rust ORMs!)

- Fewer predefined patterns for database operations

Serialization and Validation

The way data is validated and serialized differs significantly:

FastAPI:

- Uses Pydantic for validation and serialization

- Strict typing and validation

- Automatic generation of OpenAPI schemas

- Extensive validation options (through Pydantic)

Robyn:

- More flexible validation options

- Less strict typing

- Manual serialization/deserialization (for example via `jsonify`), even when this is not strictly required. Robyn's documentation is not 100% clear here :( (`jsonify` is imported but not used; in my tests, I didn't even have to import `jsonify`, since returning a dict as the response is completely sufficient)
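With FastAPI, declaring `Item` as a Pydantic model is enough to get field-level validation errors. In Robyn, unless you add a validation library yourself, checks like the following tend to end up in your handler. This is a minimal stdlib sketch, assuming the body has already been decoded into a dict (for example via `request.json()`); `parse_item` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Item:
    id: str
    name: str
    price: float


def parse_item(data: dict) -> Item:
    """Validate a decoded JSON body by hand, collecting one message per bad field."""
    errors = []
    for name in ("id", "name"):
        if not isinstance(data.get(name), str):
            errors.append(f"{name}: expected a string")
    price = data.get("price")
    # bool is a subclass of int in Python, so it has to be excluded explicitly
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        errors.append("price: expected a number")
    if errors:
        raise ValueError("; ".join(errors))
    return Item(id=data["id"], name=data["name"], price=float(price))


print(parse_item({"id": "1", "name": "Pen", "price": 2}))
```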

FFIs

At least with CPython, you can already move certain parts of your Python code to C/C++ for speed (if that hasn't already been done, as in much of the standard library). Since Robyn has a Rust runtime, it makes integrating Rust code child's play!

For our small performance comparison, I compute Fibonacci numbers for n up to a maximum of 30. To show raw Python performance, no tricks like memoization are used:

```python
def py_fib(n: int) -> int:
    if n < 2:
        return n
    return py_fib(n - 1) + py_fib(n - 2)
```
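Why does n = 30 hurt so much more than n = 10? Each call to `py_fib` (for n ≥ 2) makes two more calls, so the number of calls C(n) satisfies C(n) = 1 + C(n-1) + C(n-2) with C(0) = C(1) = 1, which works out to 2·F(n+1) - 1. The small helper below (`count_calls`, a hypothetical name) evaluates that recurrence iteratively instead of running the recursion:

```python
def count_calls(n: int) -> int:
    """Number of py_fib invocations needed to evaluate py_fib(n)."""
    calls = [1, 1]  # py_fib(0) and py_fib(1) return immediately: one call each
    for k in range(2, n + 1):
        # one call for py_fib(k) itself plus all calls made by its two sub-calls
        calls.append(1 + calls[k - 1] + calls[k - 2])
    return calls[n]


for n in (10, 20, 30):
    print(n, count_calls(n))  # 177, 21891 and 2692537 calls respectively
```

So a single `/fibonacci/30` request performs roughly 2.7 million Python function calls.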

I've also implemented this "generator" in Rust (crudely, and without recursion):

```rust
//rustimport:pyo3

//:
//: [dependencies]
//: num-bigint = "0.4"
//: num-traits = "0.2"

use pyo3::prelude::*;
use num_bigint::BigUint;
use num_traits::{Zero, One};

#[pyfunction]
fn fibonacci(term: u64) -> PyResult<String> {
    // Start with F(0) = 0 and F(1) = 1; after `term` iterations, `a` holds F(term)
    let (mut a, mut b): (BigUint, BigUint) = (Zero::zero(), One::one());
    for _ in 0..term {
        let next = &a + &b;
        a = b;
        b = next;
    }
    Ok(a.to_string())
}
```
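For reference, here is the same iterative loop ported to Python (`iter_fib` is a hypothetical name). Returning `a` after `term` iterations yields F(term), the same value `py_fib(term)` returns, and Python integers are arbitrary-precision like `BigUint`. Keep in mind that this loop needs at most 30 iterations where `py_fib(30)` needs millions of calls, so the Python-vs-Rust numbers below compare algorithms as well as languages.

```python
def iter_fib(term: int) -> int:
    """Iterative Fibonacci: after `term` steps, `a` holds F(term)."""
    a, b = 0, 1
    for _ in range(term):
        a, b = b, a + b  # same update as `next = a + b; a = b; b = next`
    return a


print([iter_fib(n) for n in range(10)])
print(iter_fib(30))
```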

Child's play, as I only had to worry about the implementation. Dependencies can be defined via comments and are automatically resolved by the Robyn CLI.

After writing one's Rust code, the following command suffices:

```shell
robyn --compile-rust-path "my-robyn-rust-dir"
```

And just like magic (via PyO3), the Rust code is compiled into a native extension library for the current platform!

This can then be imported like a normal Python module (my Rust file is called native_fib.rs and is in the same directory as the Robyn main.py):

```python
from native_fib import fibonacci as rs_fibonacci
```
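The article does not show the `/fibonacci/:size` handler itself. The following framework-agnostic sketch shows one way the `use_native` switch could dispatch between the two implementations; `compute_fibonacci` and `parse_flag` are hypothetical names, and falling back to Python when `native_fib` has not been compiled is an assumption for illustration, not the author's setup (in a benchmark, a silent fallback would distort the results).

```python
from typing import Optional

try:
    # Only importable after compiling native_fib.rs with the Robyn CLI
    from native_fib import fibonacci as rs_fibonacci
except ImportError:
    rs_fibonacci = None  # assumption: fall back to the pure-Python version


def py_fib(n: int) -> int:
    if n < 2:
        return n
    return py_fib(n - 1) + py_fib(n - 2)


def parse_flag(value: Optional[str]) -> bool:
    # Query parameters arrive as strings, and bool("false") is True, so compare the text
    return value is not None and value.strip().lower() in {"1", "true", "yes"}


def compute_fibonacci(size: int, use_native: Optional[str] = None) -> str:
    if parse_flag(use_native) and rs_fibonacci is not None:
        return rs_fibonacci(size)  # the Rust function already returns a str
    return str(py_fib(size))


print(compute_fibonacci(10))
```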

Performance Comparison

As already mentioned, the performance comparison uses Fibonacci number generation and the following setup:

- An endpoint `/` which returns a simple JSON:

```python
# fastapi implementation
@app.get("/")
async def root():
    return {"response": "success"}

# robyn implementation
@app.get("/")
async def root(request: Request):
    return {"response": "success"}
```

- A `locust` configuration file with one task:
  - Calls `/` with 10, 1000 and 10,000 users
- An endpoint `/fibonacci/:size` with the optional query parameter `use_native`
- A `locust` configuration file with three tasks:
  - Call `/fibonacci/10`, with weighting 3
  - Call `/fibonacci/20`, with weighting 2
  - Call `/fibonacci/30`, with a lower weighting
  - Distributed over 100 users, ramping up by 1 user per second
- A `locust` configuration file with three tasks (for the Rust implementation):
  - Call `/fibonacci/10?use_native=true`, with weighting 3
  - Call `/fibonacci/20?use_native=true`, with weighting 2
  - Call `/fibonacci/30?use_native=true`, with a lower weighting
  - Distributed over 100 users, ramping up by 1 user per second

All tests used 1 process and 1 worker.
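Locust picks each user's next task at random, in proportion to the task weights, so the weights determine the request mix behind the averaged response times. The stdlib sketch below (not Locust code) computes and simulates that mix; it assumes a weight of 1 for `/fibonacci/30`, since the setup does not state that value, and `expected_shares` and `simulate` are hypothetical names.

```python
import random
from collections import Counter

# Weights 3 and 2 come from the test setup; 1 for /fibonacci/30 is an assumption
WEIGHTS = {"/fibonacci/10": 3, "/fibonacci/20": 2, "/fibonacci/30": 1}


def expected_shares(weights: dict[str, int]) -> dict[str, float]:
    """Fraction of requests each task should receive in the long run."""
    total = sum(weights.values())
    return {path: weight / total for path, weight in weights.items()}


def simulate(weights: dict[str, int], n_requests: int, seed: int = 0) -> Counter:
    """Draw tasks with probability proportional to their weight."""
    rng = random.Random(seed)
    paths = list(weights)
    picks = rng.choices(paths, weights=[weights[p] for p in paths], k=n_requests)
    return Counter(picks)


print(expected_shares(WEIGHTS))
print(simulate(WEIGHTS, 6000))
```

Under this assumption, half of all requests hit `/fibonacci/10` and only about one in six hits the expensive `/fibonacci/30`.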

Test Machine

Lenovo ThinkPad P14s Gen 2i

- Processor: 11th Gen Intel® Core™ i7-1165G7

- RAM Size: 32GiB

Then let's look at the results!

Simple

This load test calls the very simple JSON response endpoint and increases user numbers steadily until a maximum of 10,000 users.

As the results show, FastAPI (at least on my laptop) already reaches its limit at 246.4 requests per second. Beyond that, more and more errors occur because Locust's requests are no longer processed, or are processed too late.

ConnectionResetError? - Or when the peer doesn't like you anymore

A ConnectionResetError is a network error that occurs when the counterparty (in this case the server) abruptly terminates an existing TCP connection, typically by sending an RST packet (Reset). This is not an orderly termination (like a FIN packet) and often signals that something unexpected has happened or the server can no longer process the connection.

Under high load, a server can be overwhelmed. This can have various causes: exhaustion of system resources (such as CPU, memory, or file descriptors) or overloading of the event loop responsible for processing asynchronous operations. If the event loop cannot accept new connections or process data on existing sockets quickly enough, timeouts can occur, or the server must forcibly close connections to free resources or prevent overload.

In the context of Python and ASGI servers like Uvicorn (used by FastAPI), the way Python handles network I/O plays a role. Although Python with asyncio and ASGI enables asynchronous operations and theoretically can manage many connections simultaneously while waiting on I/O, there are limits. The execution of Python code itself is subject to the Global Interpreter Lock (GIL), which prevents multiple native threads from simultaneously executing Python bytecode on multiple CPU cores. In very high load scenarios, this becomes a bottleneck.

Finally, even when endpoints are trivial (like simple JSON responses), the sheer number of context switches, the scheduling of coroutines, and the short time each request spends in Python code (even if it's just JSON serialization) can overwhelm the event loop. The server process spends too much time executing Python code (even asynchronously) to react quickly enough to new or existing socket events.

The ConnectionResetErrors in this test show that FastAPI (or the underlying Python/Uvicorn layer) reaches its limits quite early. The event loop cannot process incoming requests quickly enough, so the server resets connections instead of serving them in an orderly manner. This indicates that the Python runtime becomes a bottleneck under this specific extreme load. Robyn, by contrast, handles network connections in its Rust runtime outside the GIL, so it should be better at avoiding this type of bottleneck.
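The event-loop starvation described above is easy to reproduce with plain `asyncio`: a coroutine that runs Python code without ever awaiting stalls every other task on the same loop. In this minimal sketch, a heartbeat task records how late it wakes up while a simulated handler busy-loops; the 10 ms interval and 200 ms busy period are arbitrary illustration values, not measurements from the load test.

```python
import asyncio
import time


async def heartbeat(interval: float, lags: list[float], stop: asyncio.Event) -> None:
    """Wake up every `interval` seconds and record how late each wake-up was."""
    loop = asyncio.get_running_loop()
    while not stop.is_set():
        expected = loop.time() + interval
        await asyncio.sleep(interval)
        lags.append(loop.time() - expected)


async def busy_handler(seconds: float) -> None:
    """Simulates a handler that runs pure Python code without awaiting anything."""
    end = time.perf_counter() + seconds
    while time.perf_counter() < end:
        pass  # the event loop cannot run any other task during this loop


async def main() -> float:
    lags: list[float] = []
    stop = asyncio.Event()
    beat = asyncio.create_task(heartbeat(0.01, lags, stop))
    await asyncio.sleep(0.05)  # let the heartbeat run normally first
    await busy_handler(0.2)    # block the loop for about 200 ms
    await asyncio.sleep(0.05)  # give the heartbeat a chance to report its delay
    stop.set()
    await beat
    return max(lags)


if __name__ == "__main__":
    print(f"worst heartbeat delay: {asyncio.run(main()) * 1000:.0f} ms")
```

On a server, the heartbeat's role is played by the socket events of other connections: while one request's Python code runs, nothing else on that loop makes progress.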

Robyn's load test results look significantly better:

What do we see? Not only no failures (all requests could be processed), but also faster response times across the board! Exciting, but now on to the more demanding load tests.

Fibonacci

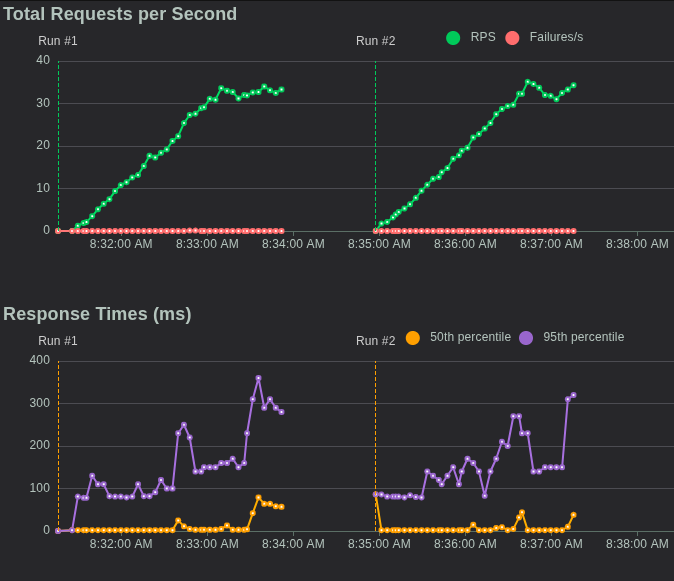

Here are the results (left FastAPI, right Robyn):

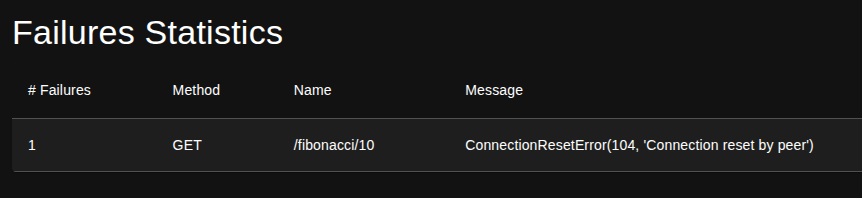

As we can see, Robyn is a few milliseconds faster in response times. Also, under FastAPI a single request failed:

Interestingly, this occurred between 25 and 27 requests per second (RPS) and did not reappear afterwards. It could have been an isolated incident, possibly attributable to my hardware, which is not specifically configured for API hosting.

But: Robyn also supports native Rust code. How does that look?

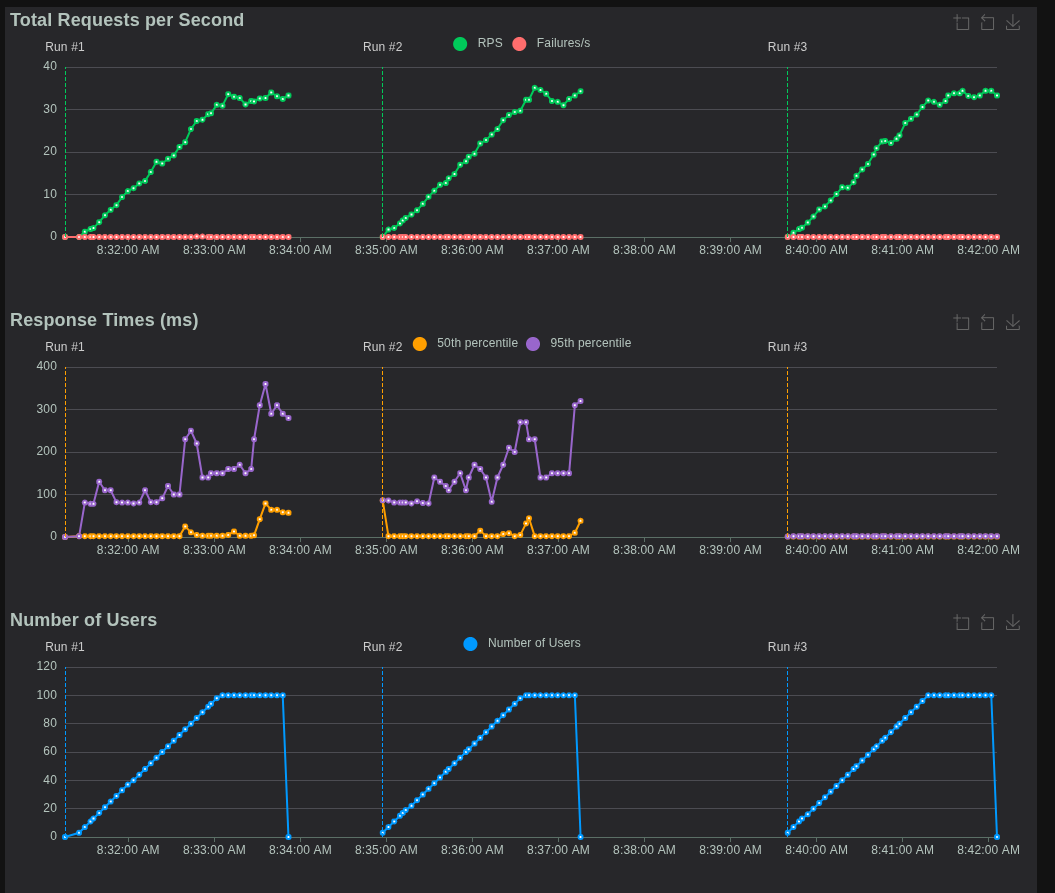

The result? Drastic.

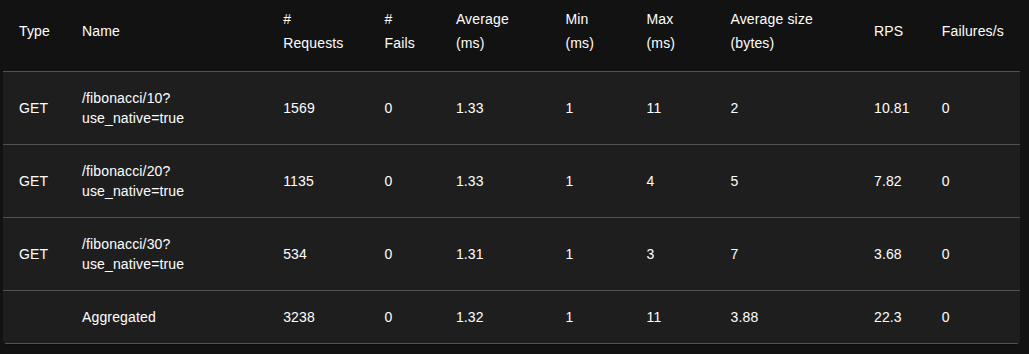

Joking aside: as we can see, the response times of the (far from optimized) Rust implementation are almost negligible. Here is the Locust summary:

Curiously, requesting a larger Fibonacci number seems to take less time, both on average and at the maximum. A possible explanation is that the handover from Python to Rust (and back) takes up most of the time, while the Rust loop itself (at most 30 iterations) is negligible.

Summary

We tested FastAPI and Robyn with a simple JSON endpoint and a more complex Fibonacci endpoint under load.

- FastAPI reaches about 246 RPS on my laptop, then increasingly shows errors.

- Robyn remains stable even at 10,000 users - faster and without errors.

- In the Fibonacci calculation (Python), Robyn is a few milliseconds faster, while FastAPI had a single failed request at 25-27 RPS.

- With Rust-native code in Robyn, we see a drastic improvement in response times - even less time with larger Fibonacci numbers!

Conclusion: In these tests, Robyn scaled better than FastAPI, especially under high load. And Rust impressively shows how much more performance is possible when we eliminate Python bottlenecks.

Project Structure and Developer Workflow

How do you organize a project with FastAPI compared to Robyn?

- FastAPI Project Structure:
  - Typically modular, often separated by features or domains (e.g., `routers/`, `models/`, `services/`).
  - Heavily relies on the Python ecosystem for tooling: `black` for formatting, `pytest` for testing, `alembic` for database migrations.
  - The `main.py` is often just the entry point that brings together various routers and configurations.

- Robyn Project Structure:
  - Often more monolithic since the framework is less opinionated. Many developers start with a single `app.py`.
  - Rust integration requires a clear separation between Python and Rust code (e.g., in a `native/` directory).
  - Robyn comes with its own CLI for compiling Rust code and starting the application, which slightly changes the workflow.

- Development Server:
  - FastAPI: Uses `fastapi dev main.py` for a fast hot-reload development server.
  - Robyn: Offers a built-in hot-reload server with `robyn --dev` that can also detect and recompile Rust code changes.

Deployment in Practice: From Local to Live

- Containerization (Docker):
  - FastAPI: The `Dockerfile` is straightforward. It's based on a Python image and starts the application with the `fastapi` CLI (which calls Uvicorn internally).

```dockerfile
FROM python:3.11-slim
WORKDIR /app
# requirements.txt must include "fastapi[standard]" so that the `fastapi` CLI is installed
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["fastapi", "run", "main.py", "--host", "0.0.0.0", "--port", "80"]
```

  - Robyn: The `Dockerfile` is more complex when Rust code is involved. It often requires a multi-stage build: one stage to install the Rust toolchain and compile the code, and a second stage to copy the compiled library into a slim Python image.

```dockerfile
# Stage 1: Build Rust
FROM rust:latest AS builder
WORKDIR /app
COPY native/ .
# Rust compilation step would go here

# Stage 2: Final Image
FROM python:3.11-slim
WORKDIR /app
# A PyO3 extension must be named after its module (without the "lib" prefix) to be importable
COPY --from=builder /app/target/release/libnative_fib.so ./native_fib.so
# ... Rest of the setup
```

- Production Server:

- FastAPI: Runs on any ASGI-compatible server (Uvicorn, Hypercorn, Daphne).

- Robyn: Comes with its own Rust-based server runtime and doesn't require a separate ASGI server.

Error Handling and Debugging

- FastAPI:
  - Offers a robust system for exception handlers. You can define global handlers for `HTTPException` or custom exceptions.
  - Pydantic validation errors are extremely detailed and specify exactly which field in the request body is invalid.
  - Debugging follows standard Python debugging practices.

- Robyn:
  - Also provides exception handlers, though they are less structured than in FastAPI.
  - Debugging can be more complex when errors occur in the Rust runtime or at the Python-Rust interface, potentially requiring knowledge of both languages.
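Both frameworks let you register handlers per exception type. To show the mechanism rather than either framework's API, here is a stdlib sketch in which the most specific registered class in the exception's MRO wins, roughly the way Starlette (and thus FastAPI) looks up its handlers. `exception_handler`, `dispatch`, and `ItemNotFound` are hypothetical names, and real frameworks return response objects rather than `(status, body)` tuples.

```python
from typing import Any, Callable


class ItemNotFound(Exception):
    """Hypothetical domain exception."""


HANDLERS: dict[type, Callable[[Exception], tuple[int, dict]]] = {}


def exception_handler(exc_type: type):
    """Register a function that turns `exc_type` into a (status, body) pair."""
    def register(func):
        HANDLERS[exc_type] = func
        return func
    return register


@exception_handler(ItemNotFound)
def handle_not_found(exc: Exception) -> tuple[int, dict]:
    return 404, {"detail": str(exc)}


@exception_handler(Exception)
def handle_unexpected(exc: Exception) -> tuple[int, dict]:
    return 500, {"detail": "Internal Server Error"}


def dispatch(handler: Callable[..., Any], *args: Any) -> tuple[int, Any]:
    try:
        return 200, handler(*args)
    except Exception as exc:
        # Walk the class hierarchy so the most specific registered handler wins
        for cls in type(exc).__mro__:
            if cls in HANDLERS:
                return HANDLERS[cls](exc)
        raise


def get_item(item_id: str) -> dict:
    raise ItemNotFound(f"item {item_id} not found")


print(dispatch(get_item, "42"))
```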

When which framework?

The choice between FastAPI and Robyn depends on your project's specific requirements:

FastAPI is ideal for:

- Projects with complex data classes

- Teams who prefer strict typing

- Applications with extensive API documentation requirements

- Projects that benefit from an established community, a large ecosystem, and many learning resources

Robyn is better suited for:

- Simpler API projects

- Teams wanting more flexibility in implementation

- Projects with specific performance requirements that can be "fine-tuned" with native Rust integration and runtime

Conclusion

Both FastAPI and Robyn are modern, high-performance API frameworks with different strengths. FastAPI offers a more comprehensive solution with strong typing and validation, while Robyn provides more flexibility and a minimalistic approach. The final choice should depend on the specific requirements of your project.

FAQ – Frequently Asked Questions about Choosing the Right Framework

Which framework offers better performance?

Robyn shows better results in performance tests, especially for simple endpoints and high load. However, FastAPI offers a more mature ecosystem integration and community support.

Can I use Rust code in both frameworks?

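Robyn offers built-in Rust integration (its CLI compiles Rust files into importable Python modules), while FastAPI does not support this directly. With FastAPI, one would need to build native extensions separately, for example with PyO3 and maturin, or rely on traditional CPython FFIs.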

Which framework is better suited for complex data models?

FastAPI is better suited for complex data models, as it uses Pydantic for validation and serialization and offers strict typing. Robyn provides more flexibility but fewer predefined structures.

How do the ORM possibilities differ?

FastAPI integrates easily with SQLAlchemy, Tortoise ORM, and SQLModel. Robyn has no dedicated ORM integration but allows using Rust ORMs and provides more flexibility in database selection.

For what type of projects is each framework better suited?

FastAPI is better for complex projects with extensive API documentation requirements and strict typing. Robyn is ideal for simpler API projects and teams wanting more flexibility and native Rust integration.